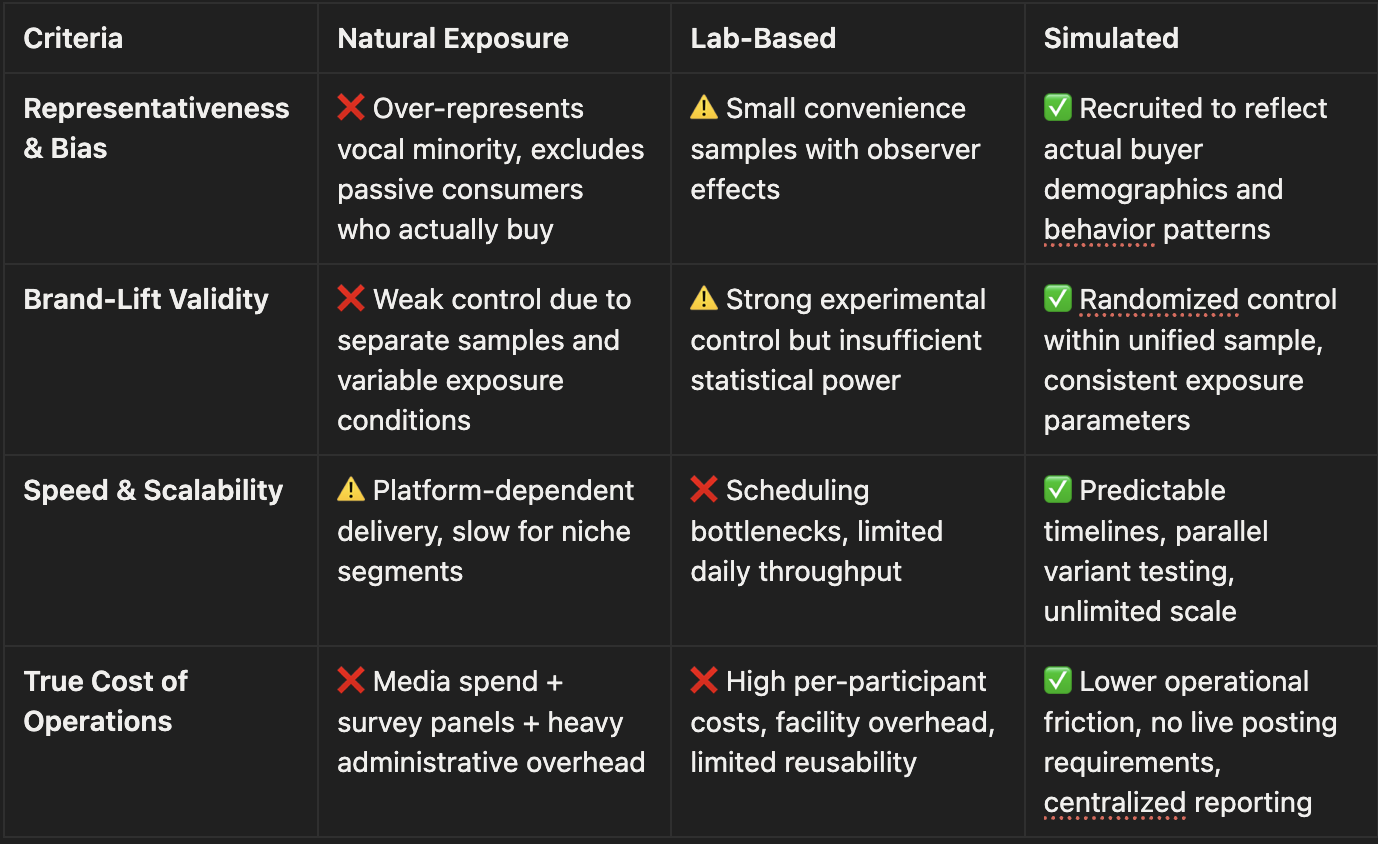

Marketing teams face a recurring choice: test creative and measure impact in the wild (natural exposure), in controlled labs, or in simulated environments that replicate real platforms at scale. Each approach promises different insights, but only one delivers the truth about what people feel and remember.

This isn't about what people say. It's about what their brains do in those critical milliseconds when real brand impact happens.

Natural exposure looks efficient because your content runs where it will eventually live. But here's what traditional research won't tell you: platform algorithms don't optimize for representativeness. They optimize for engagement.

The Vocal Minority Problem

The Silent Scrollers Gap

The Over-Optimization Trap

Real Example: Two video cuts perform similarly on likes and comments. In simulated testing, Cut A drives 12-15% higher ad recall and emotional connection among passive viewers, who are the actual buyers. Judged on engagement alone, natural exposure could have led you to pick the weaker creative, or to conclude that the campaign performed better or worse than it actually did.

Labs bring participants into controlled settings where they navigate real platforms while researchers observe behavior. The trade-offs are significant:

Strengths:

Critical Limitations:

Bottom line: Valuable for deep diagnostics, but not for fast, scalable creative optimization.

Natural exposure runs ads live on platforms and measures through engagement signals. While this mirrors actual delivery, it creates more problems than it solves:

Even providers who claim to run "natural-first" research routinely fall back on simulated environments to meet deadlines and fill quotas. Here's why:

The result: Most natural exposure projects get supplemented with simulation anyway. Planning for simulation from the start gives you better control and outcomes.

Simulated environments combine the realism of actual platforms with the scientific rigor research demands. You recruit target audiences, assign them to controlled conditions, and measure what truly drives decisions.

System 1 Signals (What Drives Decisions):

Actionable Outcomes:

Time and attention are your scarcest resources. Simulated environments reduce overhead across the board:

For Creators:

For Agencies:

For Stakeholders:

Those saved cycles compound into better creative outcomes, which usually deliver more impact than one slow, "perfect" study.

1. Define Success Metrics: Prioritize System 1 signals: recall, emotional connection, and intent alongside attention metrics.

2. Set Sampling Rules: Recruit actual buyers and category users, not just heavy platform engagers.

3. Control for Clean Insights: Standardize frequency, sequencing, and context to isolate creative effects.

4. Test Variants in Parallel: Run A/B/C tests to maximize learning per fielding cycle.

5. Close the Feedback Loop: Feed insights into creative revisions, then verify improvements with rapid re-testing.

6. Complement Strategically: Add targeted lab sessions for qualitative depth on winning concepts. Monitor natural exposure once live for media optimization.
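To make the parallel-testing step concrete, here is a minimal Python sketch of how ad recall for several variants might be compared against a control cell. It is an illustration under stated assumptions, not a description of any particular vendor's method: the `Cell` class, the function names, and the respondent counts are all hypothetical, and the pooled two-proportion z-test is just one common way to check whether a recall difference is larger than sampling noise.

```python
from dataclasses import dataclass
from math import erfc, sqrt


@dataclass
class Cell:
    """One test cell in a simulated-feed study (hypothetical structure)."""
    name: str
    respondents: int  # people assigned to this cell
    recalled: int     # how many recalled the ad afterwards

    @property
    def rate(self) -> float:
        return self.recalled / self.respondents


def lift_vs_control(variant: Cell, control: Cell) -> tuple[float, float]:
    """Return (absolute recall lift, two-sided p-value) from a pooled z-test."""
    total = variant.respondents + control.respondents
    pooled = (variant.recalled + control.recalled) / total
    se = sqrt(pooled * (1 - pooled)
              * (1 / variant.respondents + 1 / control.respondents))
    diff = variant.rate - control.rate
    if se == 0:
        return diff, 1.0  # no variation at all, so no evidence of a difference
    z = diff / se
    p_value = erfc(abs(z) / sqrt(2))  # two-sided tail of the standard normal
    return diff, p_value


def rank_variants(variants: list[Cell], control: Cell) -> list[tuple[str, float, float]]:
    """Sort variants by recall lift over control, largest first."""
    results = [(v.name, *lift_vs_control(v, control)) for v in variants]
    return sorted(results, key=lambda r: r[1], reverse=True)


if __name__ == "__main__":
    # Illustrative numbers only, not data from a real study.
    control = Cell("control", 500, 150)
    variants = [Cell("A", 500, 190), Cell("B", 500, 160), Cell("C", 500, 155)]
    for name, lift, p in rank_variants(variants, control):
        print(f"Cut {name}: lift {lift:+.1%}, p = {p:.3f}")
```

Because every cell shares the same frequency, sequencing, and context, a difference in recall can be attributed to the creative itself. In practice you would run the same comparison for emotional connection and intent, and account for testing several variants at once.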

"Simulation isn't realistic enough." High-fidelity simulated feeds mirror layout, pacing, ad load, and natural interruptions. For measuring what people feel and remember, the gain in scientific control and sample quality far outweighs minor differences from live delivery.

"We need platform engagement signals." Keep them for in-flight optimization. Use simulation to confirm creative actually persuades and creates lasting memory. Then let platform algorithms distribute it efficiently to the right audiences.

"Our stakeholders expect 'in-market' data." Give them both: simulation for causal brand-lift measurement, plus lightweight natural exposure for directional engagement validation. This hybrid approach reduces wasted spend while accelerating learning cycles.

Here's what traditional research can't give you: context. With simulated environments, you control the survey instrument, which means you can build comprehensive benchmarks across platforms, industries, and markets.

What This Unlocks:

This isn't just measurement—it's competitive intelligence that informs strategy. Natural exposure can't deliver this because each platform uses different metrics. Lab testing can't scale it. Only controlled simulation gives you consistent, comparable data that builds into a strategic asset.
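As a rough illustration of what a benchmark makes possible, the sketch below places a new study's recall lift against lifts from past studies that used the same survey instrument. The function name and the sample figures are hypothetical, and percentile rank is only one simple way to express "how does this creative compare to the norm".

```python
from bisect import bisect_left, bisect_right


def percentile_rank(benchmark: list[float], value: float) -> float:
    """Percentile (0-100) of `value` within past results; ties count as half."""
    ordered = sorted(benchmark)
    if not ordered:
        raise ValueError("benchmark needs at least one past study")
    below = bisect_left(ordered, value)            # studies strictly below
    ties = bisect_right(ordered, value) - below    # studies with the same lift
    return 100.0 * (below + 0.5 * ties) / len(ordered)


if __name__ == "__main__":
    # Illustrative recall lifts in percentage points, not real benchmark data.
    past_lifts = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
    print(percentile_rank(past_lifts, 8))
```

This kind of comparison only works when every study in the benchmark asks the same questions in the same way, which is why control over the survey instrument matters.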

If your goal is to create content that doesn't just perform but truly connects, simulated environments reveal what others miss. Natural exposure overweights the vocal few and muddies brand-lift with fragmented data. Lab testing adds valuable depth but can't scale insights across variants and audiences fast enough.

Transform your measurement approach:

Phase 1: Foundation (Month 1)

Phase 2: Integration (Months 2-3)

Phase 3: Optimization (Ongoing)

Your Implementation Roadmap:

The compound effect: Every simulation builds your competitive intelligence. Every benchmark gives you strategic advantage. Every insight drives better creative decisions.

Ready to stop guessing and start knowing what moves your audience? Your benchmark-driven measurement transformation starts with one simulation.
